Katrin Köppert takes a new look at the explainability of AI systems, through a queer perspective. Queersplaining means not only explaining AI, but also revealing the inherent contradictions and power structures. An explainability that does not support the illusion of a universal solution, but recognises the complexity and context dependency of AI.

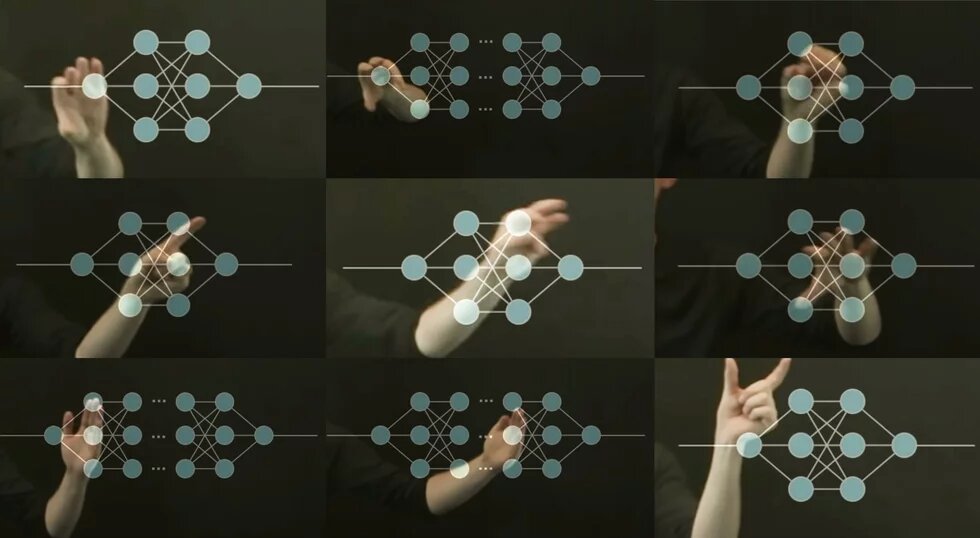

Despite the corporate and sometimes politically fuelled hype surrounding Artificial Intelligence, "lack of trust is one of the main reasons for the lack of acceptance of AI systems". In addition to the lack of a focus on the common good, the so-called black box algorithms are also to blame. How neural network algorithms receive and correlate millions of data points is almost incomprehensible to users, and even to programmers and data scientists. The processes are beyond interpretation and can no longer be explained. As a result of this development and to ensure responsible handling, criteria are being defined that focus on transparency and plausibility, or on explainability. For users to be able to interact with AI in a self-determined way, the systems must be understandable enough to enable them to be explained. Explainability as a keyword or concept for the ethical use of AI is not self-explanatory and in turn requires critical classification. I would like to do this briefly under the heading of "queersplaining". Queersplaining is not intended to be a different or morally better way of mansplaining but, rather, an attempt to look at explainability from a queer perspective. But before reading explainability in a queer way, a few words first on the question of the possibility of queer AI or the queering of technology.

Queer/ing Technology

We can understand queer as a movement of crossing gender identities that are claimed to be stable and unchangeable. Queer is therefore directed against naturalising and unambiguous statements about gender. In terms of technology, this means that, with this movement, we are dealing with a practice of re-imagination aimed at ambiguity, plurality and transformability (Klipphahn-Karge et al. 2022). Naturalised views of gender, which are reflected in technological artefacts, are broken up or rendered implausible on the basis of this practice: The fact that AI speaks to us as a female voice in the form of voice assistance systems is not self-explanatory but is, from an intersectional gender-theoretical perspective, the effect of a long popular cultural history in which fears of civilization were associated with the female gender. Take Fritz Lang's film Metropolis, for example. Against the backdrop of a loss of white male authority due to mechanisation and industrialisation at the beginning of the 20th century, the robot was disciplined in the form of a woman while, at the same time, the 'New Woman', who was becoming emancipated at the time, was – by being linked with Black femininity – subdued by the association of the robot as a 'slave' (Chude-Sokei 2016).

Queering technology means questioning such plausibilities as the female gender of language assistance systems and assuming that they are not self-explanatory, especially if these plausibilities are associated with repression or discrimination as a result of stereotyping.

Not taking the self-evident for granted harbours the potential for reinterpretation and presents technology as something that is not fixed and is open to change. Conversely, technology in the understanding of its indeterminacy could be claimed to be queer – at least in the sense that, like gender, it cannot claim objective universality. Technology develops in context and is dependent on its discourses and applications. So the problem is not so much the technology as such, which, as I have just tried to convey, is a relation of indeterminacy. The problem is more the context. What is interesting here is that the current context itself works with the idea of technology as being flexible, indeterminate, and to a certain extent queer, affirmative. This not only complicates the issue of how to respond to this from a queer perspective of theory building, but also what queer networks and AI policies could look like.

Confusing AI myths

The fact that technology is indeterminate can be seen simply by looking at the explosion of terms that we are confronted with in current discussions about AI: The talk is of algorithms, voice assistance systems, robotics, wearables, deep learning, and so on. The diversity of terminology makes artificial intelligence appear vague and ambiguous and corresponds to a discourse on AI that is based more on fantasy than on the reality of technological operations. We are therefore dealing with myths that not only determine the present, but also narrow the space of the future: The best example is Elon Musk's repeated claim that there could be self-driving cars in the future (Kraher 2024). Asserting that this will be the case is tantamount to myth-making, and it is a myth-making that determines in the present what will be considered socially intelligible in the future: that there will still be cars, that we are to continue to use toxic energy in the form of batteries to make cars, etc.

What is problematic about creating such myths is, on the one hand, that fantasy here represents a male apostrophised fantasy of omnipotence, i.e. the idea that technology is the solution to a problem that is, mind you, imaginary, i.e. a problem that only exists because man/Musk wants it to and not because it really is a problem. After all, who would actually want to be transported by a self-driving car has yet to be determined, given the long-standing tradition of freedom being embodied by driving a car oneself. However, it is also problematic that the fantasies of what is technically possible cement a very specific concept of technology and, in this respect, are a tactic of concealing what AI could also be (oriented towards the common good, ecological). Fantastic AI narratives and AI myths function to a certain extent as a mirror image of the black boxes. Phantasmatic fantasies thus become part of the problem of obscuring the specificity of the context in which AI is mostly thought of in terms of profit, military power and surveillance. Equivocation – i.e. what we associate with queer ambiguity – becomes the catalyst for a hegemonic understanding of AI, that is, an understanding based on effectiveness, feasibility and power.

Conversely, what does this mean for a queer theorization of AI which, as I mentioned at the beginning, would like to represent a movement that is geared towards ambiguity and indeterminacy? Because in response to ambiguity as an instrument of domination, it could be said that greater precision is needed when talking about AI, more precise explanations in order to filter AI myths as engines of gender-specific, racist, ableist discrimination through technology. So is explainability necessary, or is explainability an instrument of queer online politics?

Explainability

The desire for explainability is quite understandable in view of the damage caused by the tech companies' fantasies on the one hand and the black boxes on the other. However, as Nishant Shah explains in his article Refusing Platform Promises, the demand for effective transparency and explainability is not a break with the promises made by companies and is not an effective challenge to what is considered to be the context for the fantasies: Explaining what decisions are made in the design of AI systems leaves intact the idea of considering AI as a solution to societal, social problems. The development of AI systems that deliver explainable results does not eliminate AI as a dream machine; in other words, AI in the function of an unconscious that directly links the technological to the capitalist flow. Explainability with the understanding of making AI more accessible, as stated in the 'white paper' on Ethics briefing. Guidelines for the responsible development and use of AI systems, meaning that users know when they are interacting with an AI system and how it works, does not call into question the usefulness of such a company producing self-driving cars in the bigger picture of the climate crisis, extractivism and machismo.

So what would explainability be from a queer perspective: What is queersplaining? With this, I do not mean the denial of explainability per se, although I can also understand Nasma Ahmed when they say about racist facial recognition technology: "I don't just think about refusal, I think about absolute shutdown. We don't need a restart. The technology should not exist at all." (Sharma and Singh 2022, 182) I share Ahmed's rejection of an almost naïve concept of diversity that reacts to algorithmic discrimination, which to a certain extent forms the background of the approaches to explainability described above: Explainability as a vehicle for the participation of everyone is a problem, at the latest when we realise that "AI for all" contributes to extractive logics, i.e. those logics that spread the ecological impact of huge data servers and amounts of energy unfairly and will affect racialised people of the global majority in a disadvantageous, not to say existential manner.

Queersplaining can therefore only mean one thing for the time being: Explaining by way of the contradictions associated with AI. That means explaining without trying to resolve contradictions. On the contrary: Explaining in order to bring the contradictions into focus. With the contradiction of thinking, we are forced to think of AI as something very local, very temporary, very context-dependent. Queersplaining is therefore first and foremost just the modest desire not to confuse explainability with universalising and objectifying talk about AI but, rather, to connect it with the inevitability of contradictions. The resulting slogan will certainly not be "AI for all" but, rather, "AI for some, perhaps in one place or other".

This commentary follows up on the event "AI for All: Gender Political Perspectives on Artificial Intelligence," which took place on April 19, 2024.

For a more in-depth discussion of queer-feminist, intersectional approaches to explainability, please refer to the workshop "After Explainability. AI Metaphors and Materialisations Beyond Transparency", 17-18 June 2024, organised by the Participatory IT Design research group at the University of Kassel and the DFG research network Gender, Media and Affect. Contact: Goda Klumbytė, goda.klumbyte@uni-kassel.de

The views and opinions in this article do not necessarily reflect those of the Heinrich-Böll-Stiftung European Union.

This article first appeared here: eu.boell.org