Artificial Intelligence, or "AI," means different things to different people, but every meaning touches on how women and minorities relate to technology, and on how those technologies adapt to us, or don’t.

Our current relationship to AI is reminiscent of how the publishing industry used to sell books to women, romance novels in particular. The formula was simple: put a woman in the path of an elite, mysterious professional and have her swept off her feet.

Selling Romance

These mystery men were surgeons, pilots, and lawyers, and they were mostly inaccessible to women. Styled as dashing, charming, and chivalrous, they were sought-after specimens that few women could meet outside escapist romance novels. Small wonder that stewardess and secretarial jobs became so coveted: proximity to old boys’ clubs, where husband material could be found, was in high demand.

Until it wasn’t. The 1960s brought book publishers an audience of women trailblazers on the work front. These emancipated women now had access to elite professions — as well as to the men who used to hold these roles.

Readers bored by “tall, dark, and handsome” hero tropes needed something different from office-worker romances.

Romances about strong men in elite professions lost appeal when their mystery evaporated.

Build a Better Idol

The threat of unsold books spurred publishers into action. What did they do? They invented a new romance genre: “paranormal romance.”

Featuring vampires, werewolves, vikings, and gods, the genre was marketed as an escape from regular human men’s bad jokes, bad breath or bad posture.

“Paranormal romance” became a genre grossing more than $100 million a year, one that helped the publishing industry dazzle its audience without changing much.

This razzle-dazzle marketing technique is well-known to those of us who work in tech.

We’ve repeatedly been sold the narrative of the tech industry as an elite sector shrouded in secrecy.

We’ve seen the parade of tech vanguards and visionaries and, above all, “geniuses,” who are usually men of one “type.”

But as with the downturn in traditional romances, once women got the lay of the land in tech, they started a wave of whistle-blowing that extended into tech's shadow industry, venture capital.

Where publishers risked unsold books over a tired fantasy, the tech industry has billion-dollar IPOs on the line. Its new hero is a technology promoted as de-emphasizing human behaviours: Artificial Intelligence.

AI does for the tech industry what the “paranormal romance” genre did for romance booksellers.

AI is hyped as being purely data-driven. In other words, it’s as far from error-prone human behavior as a vampire is from a dorky office-mate.

Tech investors have embraced AI-driven everything, and products, services, and systems built on that infatuation are being rolled out globally. But AI is not confined to experimental branches of technology; it’s touching many areas of everyday life in ways that we might not want or enjoy.

It’s probably time to look at AI as if it’s an actual, intimate relationship we’re in. I’m a romantic, but AI algorithms have gone in the direction of an abusive relationship — minus the billionaire, the helicopter rides, and the safe word.

Artificial Intelligence, the “Paranormal Romance” of Tech

Facial recognition technology — a cornerstone of AI — has already had outsize effects on people’s lives. For those who are paying attention, AI is already showing its bad breath and poor manners.

Just look at Face ID on the iPhone X.

Many users were delighted that it let them unlock their phones and get at their call records, emails, and texts with a glance. Among those not delighted, however, were some iPhone X users in China.

It’s all fun and games until your boss or co-worker is able to unlock your mobile phone.

Or consider Black people. They can avoid the iPhone X, but many cannot avoid AI-powered surveillance cameras that perform consistently poorly on people who look like them.

“Consistently poor” performance may mean police arresting you in error because their AI software misidentifies Black and other dark-skinned men, women and children.

What if these failures don’t stop police from adopting such AI systems wholesale?

Without accuracy guidelines, there is no guarantee that an AI algorithm works as well on you as it does on me.

If AI is a mirror of the past (and of its makers’ blind spots), we should care about how we may be stigmatized, stereotyped or harmed by AI algorithms.

The absence of accuracy guidelines is also why Amazon’s “AI-driven” hiring algorithm had to be scrapped after it was found to exclude women from the hiring pipeline simply for being women.

Men were not negatively affected by Amazon’s AI hiring algorithm, and no one noticed the bias until it had already affected candidates.

These aren’t far-off examples. Just in the past year, I had a jarring run-in with AI algorithms myself. Promised a pre-approved credit line, I submitted a driver’s license scan, a passport photo and a “selfie” to a major credit card issuer, only to be told that I “could not be verified,” for reasons no one could give me.

There was no fallback in case the AI failed. My driver’s license scan, passport information and selfie? Gone. All I had left after handing my data to some AI system were representatives who could not explain what their AI algorithms did or why they hadn’t worked.

“AI is trying to decide if you get a job, AI is deciding if you get access to medical treatment — and if we get that wrong, you have real world consequences.” — Joy Buolamwini, founder of the Algorithmic Justice League

We should speak up about who may use our data to get closer to us than we want them to. In addition to questioning how our data is used, we can also:

1. Demystify Technology.

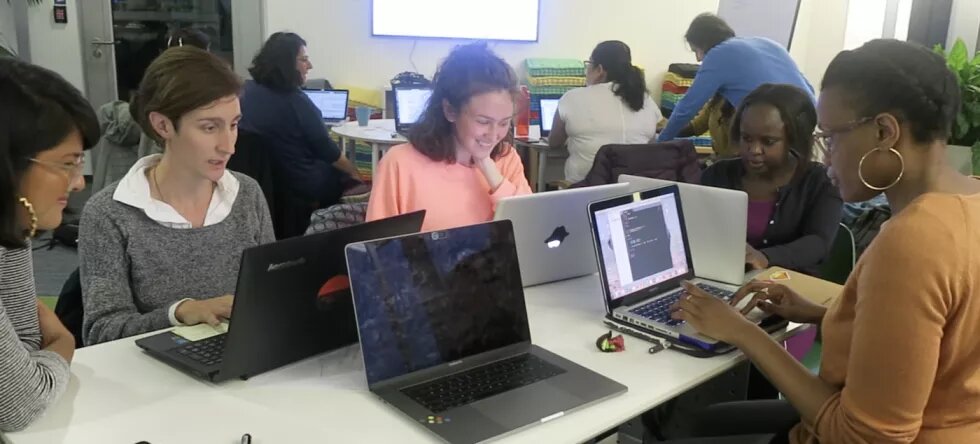

I started a non-profit organization, FrauenLoop, in Berlin, with the explicit goal of demystifying technology for women. We began with a web development course and expanded into data analysis, then software testing, machine learning, and finally an introduction to neural networks, the technology on which much of what is marketed as Artificial Intelligence is built.

2. Diversify Technology.

A lack of diverse, global datasets helps explain why Face ID couldn’t tell some Asian users apart. It’s also why self-driving cars and some financial verification algorithms don’t always recognise dark complexions.

It’s also one reason why Amazon’s hiring algorithm, when choosing between a man and a woman, consistently passed over the woman.

3. Demand Transparency.

We should question the role of AI algorithms in our selection for jobs, healthcare, promotions or financial products. Were they trained by someone who neither considered nor cared about data for your demographic?

When AI fails to properly evaluate my data, that’s my problem. But when it fails to recognize the ageing, the overweight, the sun-tanned or the curly-haired, then my problem could quickly become yours.

Every Hero Needs a Code (of Conduct)

We don’t all need retraining as data scientists; we just need to ensure that AI algorithms don’t become default decision-makers whose predictions and recommendations go unquestioned and barely understood.

You wouldn’t believe that the vampire from the “Twilight” novels is real, let alone trust him to choose the best college candidates, yet entrusting AI algorithms with key decisions without an error-checking process amounts to much the same thing.

There is no such thing as bias-free, human-independent technology, and the sooner we acknowledge this, the less likely we are to get punk’d by an algorithm.

Creating ethical, privacy, and fair-use guidelines for AI products and services is critical. And we should know better than to swoon like old-school romance readers over “super-human” AI. Ask any paranormal romance fan and they will tell you that underneath every type of hero there is, usually, a regular guy.

Many tech educators are fighting back against the romanticization of AI — these organizations are ringing the alarm:

For more information on tech education for women, made in Berlin, visit frauenloop.org.